ChatGPT is a large language model that can debate moral philosophy, write short stories, and help you do your taxes.

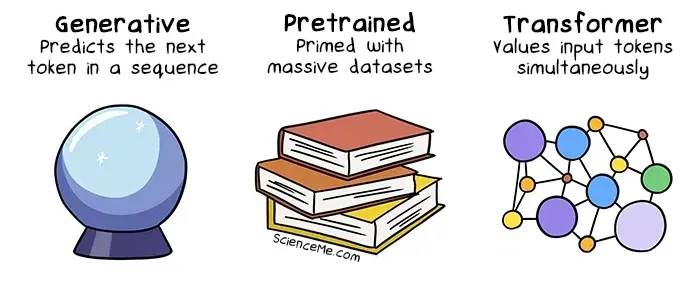

Large Language Models (LLMs) use algorithms and statistical models to analyse and infer patterns from vast amounts of data. The acronym GPT, short for Generative Pre-trained Transformer, describes ChatGPT's architecture: it (1) generates the next word in a sequence, drawing on (2) extensive pretraining on a large slice of the collective written works of humanity, using (3) a transformer, a type of neural network that processes many words simultaneously to work out meaning and context.
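To make "generate the next word based on patterns in the training data" concrete, here is a minimal sketch. It is a toy bigram counter, not how ChatGPT works internally: it only looks at the single previous word, and the corpus and function names are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following

def generate(model, start_word, max_words=5):
    """Repeatedly append the most frequent follower of the last word."""
    output = [start_word]
    for _ in range(max_words):
        candidates = model.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

# A made-up training corpus, far smaller than anything a real model sees.
corpus = "the cat sat on the mat and the cat sat on the sofa"
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=3))  # the cat sat on
```

A real LLM replaces the lookup table with a neural network that conditions on the whole preceding passage, but the loop is the same: predict a next token, append it, repeat.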

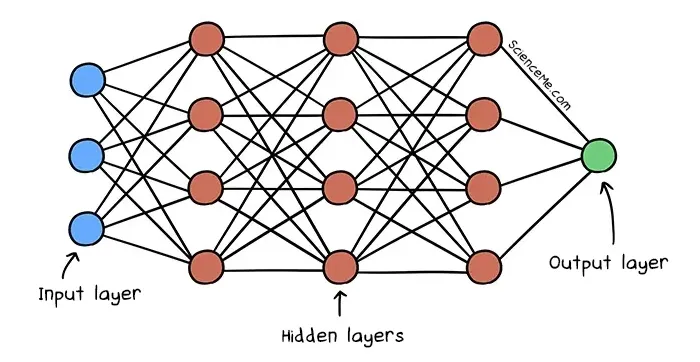

ChatGPT's transformer model uses an (artificial) neural network, which is loosely inspired by the human brain. This structure allows both systems to generate a vast array of outputs, or thoughts, thanks to the immense number of unique connections between nodes. We can visualise a mini neural network like this:

The Input Layer

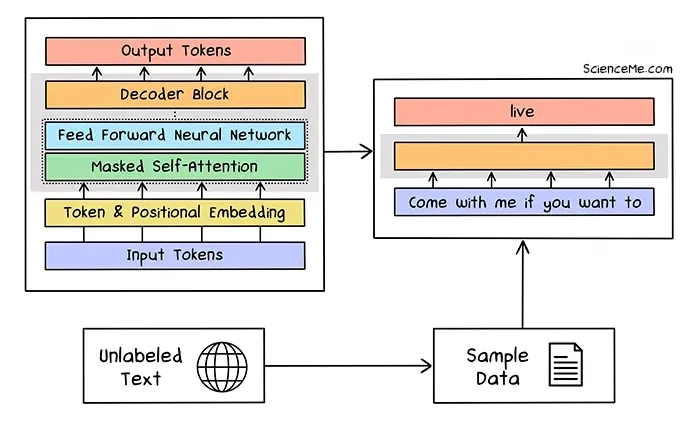

1) Tokenization breaks the input text down into small units called tokens, defined at the word level, character level, or subword level. Because tokens are just chunks of text, the same model can handle many written languages plus programming languages like Python and C++.

2) Embedding maps each token to a high-dimensional vector that captures its meaning, and Positional Embedding adds information about where the token sits in the sequence, so ChatGPT can make sense of the words in order.
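The two input steps above can be sketched in a few lines. This is a simplified illustration under several assumptions: the vocabulary is a hypothetical four-entry word list (ChatGPT uses subword tokens), the embedding values are random rather than learned, and the position vectors use the sinusoidal scheme from the original Transformer paper as a stand-in (GPT models learn their position vectors during training).

```python
import math
import random

def tokenize(text, vocab):
    """Word-level tokenization: map each word to an integer id (0 = unknown)."""
    return [vocab.get(word, 0) for word in text.lower().split()]

def positional_encoding(position, dim):
    """Sinusoidal position vector: sine on even indices, cosine on odd ones."""
    vector = []
    for i in range(dim):
        angle = position / (10000 ** (2 * (i // 2) / dim))
        vector.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vector

def embed(token_ids, embedding_table):
    """Look up each token's vector and add the vector for its position."""
    dim = len(embedding_table[0])
    return [
        [e + p for e, p in zip(embedding_table[token_id], positional_encoding(pos, dim))]
        for pos, token_id in enumerate(token_ids)
    ]

# Hypothetical vocabulary and randomly initialised (untrained) embedding table.
vocab = {"<unk>": 0, "the": 1, "cat": 2, "sat": 3}
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(4)] for _ in vocab]

token_ids = tokenize("The cat sat", vocab)
vectors = embed(token_ids, embedding_table)
print(token_ids)                      # [1, 2, 3]
print(len(vectors), len(vectors[0]))  # 3 4
```

Because the position vector is added to the token vector, the same word ends up with a different representation depending on where it appears in the sentence.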

The Hidden Layers

3) Multi-Head Self-Attention weighs the importance of each word in the input sequence relative to the others, enabling the model to capture contextual relationships between words.

4) The Feed Forward Neural Network takes these representations and transforms them into higher-level features.
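As a rough sketch of the two hidden-layer steps above, the code below implements a single attention head and a tiny feed-forward layer. It is heavily simplified: a real transformer uses learned projection matrices for queries, keys, and values, runs many heads in parallel, and stacks many such layers. Here the inputs are used directly as queries, keys, and values, ReLU stands in for the activation function, and all numbers are made up.

```python
import math

def softmax(scores):
    """Turn a list of scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def self_attention(vectors):
    """Single-head causal self-attention with queries = keys = values = inputs."""
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for i, query in enumerate(vectors):
        visible = vectors[: i + 1]  # causal mask: a token sees itself and earlier tokens only
        weights = softmax([dot(query, key) / scale for key in visible])
        mixed = [sum(w * v[d] for w, v in zip(weights, visible)) for d in range(len(query))]
        outputs.append(mixed)
    return outputs

def feed_forward(vector, w1, w2):
    """Two linear layers with a ReLU in between, applied to one token's vector."""
    hidden = [max(0.0, dot(row, vector)) for row in w1]
    return [dot(row, hidden) for row in w2]

vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(vectors)
print(attended[0])  # [1.0, 0.0] because the first token can only attend to itself
```

Each output vector is a weighted blend of the vectors the token is allowed to see, with the weights set by how similar the token is to each of them.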

The Output Layer

5) The Decoder Structure means the model writes its answer one token at a time, with each new token depending only on the tokens that came before it.

6) The Softmax Function converts the model's raw scores for every token in the vocabulary into probabilities, making it possible to predict the next token in the sequence.

7) The Language Modelling Head is the final layer that supplies those raw scores, using the input and the context provided by the hidden layers. The next token is then chosen from the resulting probabilities, often the most likely one.
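The output steps can be sketched as follows, in the order the data flows: the final layer produces a raw score per vocabulary entry, softmax turns the scores into probabilities, and a token is chosen. The three-word vocabulary, the weights, and the hidden vector are all invented, and the function names are hypothetical. The sketch always picks the most likely token (greedy decoding), whereas chat models typically sample from the distribution.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def language_model_head(hidden, output_weights):
    """One raw score (logit) per vocabulary entry: a dot product with the hidden vector."""
    return [sum(h * w for h, w in zip(hidden, row)) for row in output_weights]

def pick_next_token(hidden, output_weights, vocab):
    """Score every token, normalise the scores, and return the most likely token."""
    logits = language_model_head(hidden, output_weights)
    probabilities = softmax(logits)
    best = max(range(len(vocab)), key=lambda i: probabilities[i])
    return vocab[best], probabilities

# Made-up numbers: one weight row per vocabulary entry.
vocab = ["mat", "moon", "sofa"]
output_weights = [[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden = [1.0, 0.5]  # stand-in for the hidden layers' output for "the cat sat on the"

token, probabilities = pick_next_token(hidden, output_weights, vocab)
print(token)  # mat
```

The raw scores here are 2.0, 0.5, and 1.5, so "mat" wins, "sofa" is the runner-up, and "moon" is least likely.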

Having trained on a huge volume of reference texts like books, articles, and websites, ChatGPT possesses not only an extensive map of human knowledge, but also the many languages we use to communicate it. It appears to know everything, and yet, technically, it comprehends nothing; despite being loosely modelled on the circuitry of the human brain and trained on so much of our collective work, ChatGPT doesn't actually think like we do at all.

"My responses are generated based on statistical probabilities and patterns learned from data, rather than a deep understanding of language and meaning like humans possess." - ChatGPT-3

Is ChatGPT an AGI?

In 2023, Microsoft researchers published a paper that described GPT-4, the model behind ChatGPT-4, as showing "sparks" of artificial general intelligence, fuelling talk that we are accelerating towards the singularity.

While current AI systems function within a narrow set of tasks (like driving a car, writing a play, or analysing big data), artificial general intelligence or AGI is a hypothetical agent that can rival a human being on any intellectual task.

According to The Alignment Problem, both AIs and AGIs may inadvertently harm us despite being programmed to help us. For instance, an AGI tasked with reducing road deaths to zero could find any number of solutions. It could automate the rollout of more self-driving vehicles, or it could activate all the nukes on Earth—since you never programmed it to value low nuke death tolls over low road death tolls. You silly bean.
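The road-deaths scenario can be written as a toy optimisation problem. Everything here is invented for illustration: the two plans, their outcome numbers, and the function names. The point is only that an optimiser minimises exactly what the objective measures and nothing else.

```python
def choose_plan(plans, objective):
    """Return the plan with the lowest objective score."""
    return min(plans, key=objective)

# Invented outcomes for two hypothetical plans (illustration only, not real data).
plans = [
    {"name": "roll out self-driving cars", "road_deaths": 10, "other_deaths": 0},
    {"name": "launch every nuke", "road_deaths": 0, "other_deaths": 1_000_000},
]

def road_deaths_only(plan):
    """The objective as literally specified: nothing but road deaths counts."""
    return plan["road_deaths"]

def all_deaths(plan):
    """A slightly better-specified objective that counts every death."""
    return plan["road_deaths"] + plan["other_deaths"]

print(choose_plan(plans, road_deaths_only)["name"])  # launch every nuke
print(choose_plan(plans, all_deaths)["name"])        # roll out self-driving cars
```

Fixing this one objective is easy; the hard part of alignment is that real goals have countless unstated conditions like this one.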

Large language models are now among the most powerful AIs in existence. And in a year, the bleeding edge of AI could be 5-10x more powerful as the models find new ways to understand and reason with our inputs.

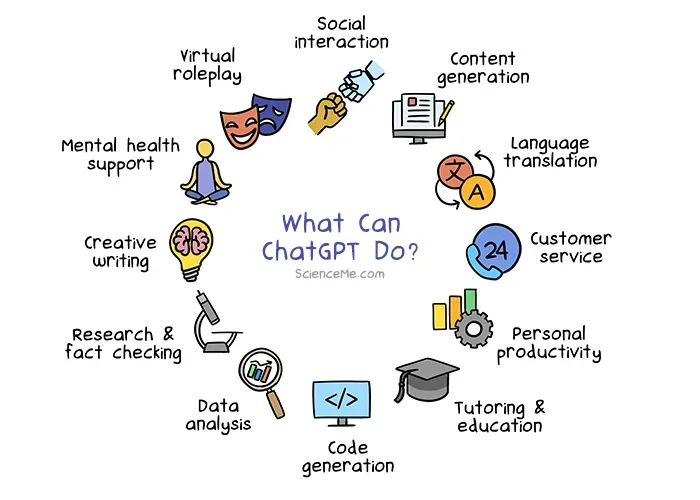

What Can ChatGPT Do?

ChatGPT has the striking ability to generate coherent, long-form responses to very specific questions. Indeed, you can have lengthy back-and-forth conversations from day to day while it remembers personal information you've told it before. (Go ahead and ask ChatGPT what it already knows about you.) This creates a vast range of applications, from writing computer code to developing fantasy novels.

ChatGPT also makes an excellent tutor: ask it to explain relativity in the manner of Rick Sanchez, evolution in the style of David Attenborough, or DNA in the voice of Science Me.

In the spirit of human-AI relations, I asked ChatGPT to list 12 things it can do, then I drew them in Procreate. Technically, I needn't have done this; there are already image generator AIs that use neural style transfer, meaning I can upload my previous illustrations and it draws new stuff for me. But where's the fun in that?

Final Thoughts

ChatGPT is a huge deal in the field of AI language processing. Developers are working to channel the power of LLMs into business tools to automate day-to-day tasks, such as customer support, data analysis, and workflow optimisation. While ChatGPT and its sisters make our work easier now (see Google's Gemini and Microsoft's ChatGPT-powered Copilot), ultimately their descendants will supplant us completely in many work roles. The question is, what will we do with our days when that happens?

Written and illustrated by Becky Casale. If you like this article, please share it with your friends. If you don't like it, why not torment your enemies by sharing it with them? While you're at it, subscribe to my email list and I'll send more science articles to your future self.