Science is more than test tubes and lab coats and Einstein's bad hair day. It's a systematic way of thinking we can use to decode the natural world.

To illustrate this, here are four fundamental canons of science brought to you by the first true scientists: philosophers.

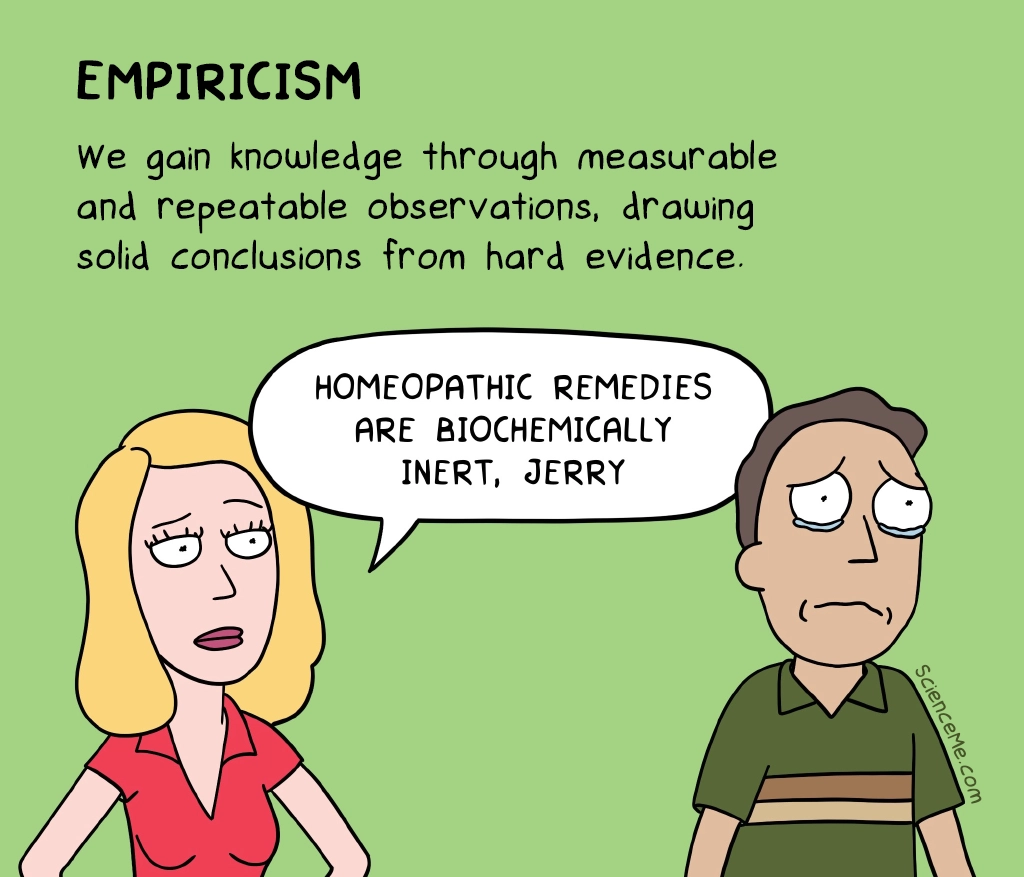

1. Empiricism

John Locke was a philosopher who argued that scientific truth must come from hard data. For most of history, scientific observations were made using our biological senses or, at best, basic instruments, which set limits on what we could actually know about the universe. Modern technology changed all that, with the likes of space telescopes and gene sequencers massively extending our data-gathering capacity. Today, we have a data explosion.

We can now probe reality at new scales and dimensions, imaging things invisible to the naked eye—like viruses, distant nebulae, and brain tumours in situ. Locke would be overjoyed by the sheer breadth and depth of empirical data we can generate.

Moreover, our technology delivers objective data, which is far more precise, scalable, and reliable than the subjective sensory data we perceive with our brains. Empiricism is so fundamental to the scientific method that we must be sceptical of the claims we can't rigorously test and measure—like ghosts, mediumship, and telepathy. If they're not reliably detectable, they fall into the domain of belief.

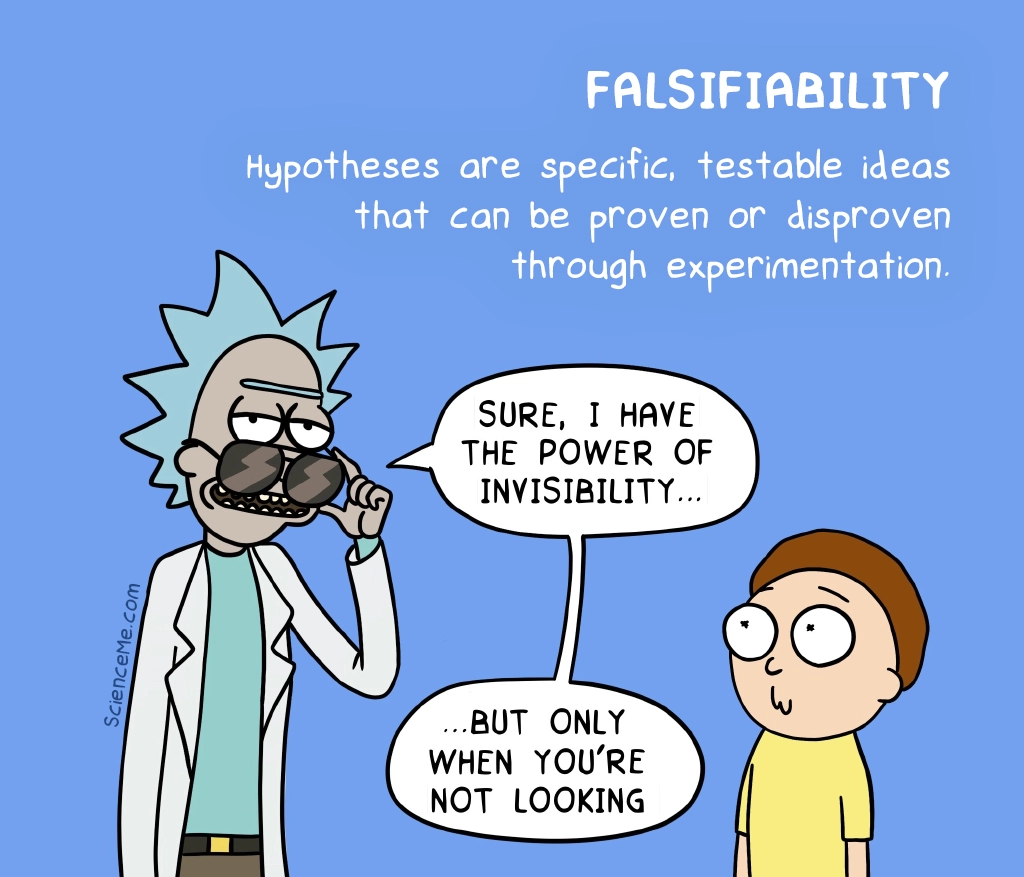

2. Falsifiability

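Around 240 BCE, a flat Earth was still a fair hypothesis: a specific, testable claim. Being science-minded, Eratosthenes designed an experiment to put the Earth's shape to the test. He compared the noon shadows in two Egyptian cities, Syene and Alexandria, a known distance apart. The shadows fell at different angles, which a flat Earth lit by parallel rays of sunlight can't explain. His ingenious approach used shadows, geography, and maths to reveal our planet's spherical nature and estimate its circumference.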

Eratosthenes began with a specific, falsifiable hypothesis: a claim about the world that, in principle, can be tested and shown to be wrong. If an idea isn't falsifiable, it's a scientific cul-de-sac.
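
To see how little maths the shadow experiment needs, here's a minimal sketch in Python. The figures are the ones traditionally reported for Eratosthenes (a shadow difference of one fiftieth of a circle and a distance of 5,000 stadia), not numbers given in this article. The function names and the half-degree tolerance are made up for illustration, and the flat-Earth check assumes the Sun's rays arrive parallel.

```python
import math


def flat_earth_is_consistent(angle_difference_deg, tolerance_deg=0.5):
    """A flat Earth lit by parallel sunlight predicts identical noon shadows
    everywhere, so the angle difference between two cities should be ~0.
    The tolerance is a hypothetical allowance for measurement error."""
    return math.isclose(angle_difference_deg, 0.0, abs_tol=tolerance_deg)


def circumference_from_shadows(angle_difference_deg, distance):
    """On a sphere, the shadow-angle difference between two cities on the
    same north-south line is the fraction of the full 360 degrees that
    separates them, so we scale the distance up to a whole circle."""
    if not 0 < angle_difference_deg <= 360:
        raise ValueError("angle difference must be between 0 and 360 degrees")
    return distance * 360.0 / angle_difference_deg


# Traditionally reported figures (assumptions, not taken from this article).
ANGLE_DIFFERENCE_DEG = 360 / 50   # one fiftieth of a circle, i.e. 7.2 degrees
DISTANCE_STADIA = 5000            # Syene to Alexandria

print(flat_earth_is_consistent(ANGLE_DIFFERENCE_DEG))  # False
print(round(circumference_from_shadows(ANGLE_DIFFERENCE_DEG, DISTANCE_STADIA)))  # 250000
```

The first line of output is the falsification: the measured difference is nowhere near zero. The second is the estimate in stadia. Converting that to kilometres depends on which stadion Eratosthenes used, which is still debated, so the sketch leaves it alone.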

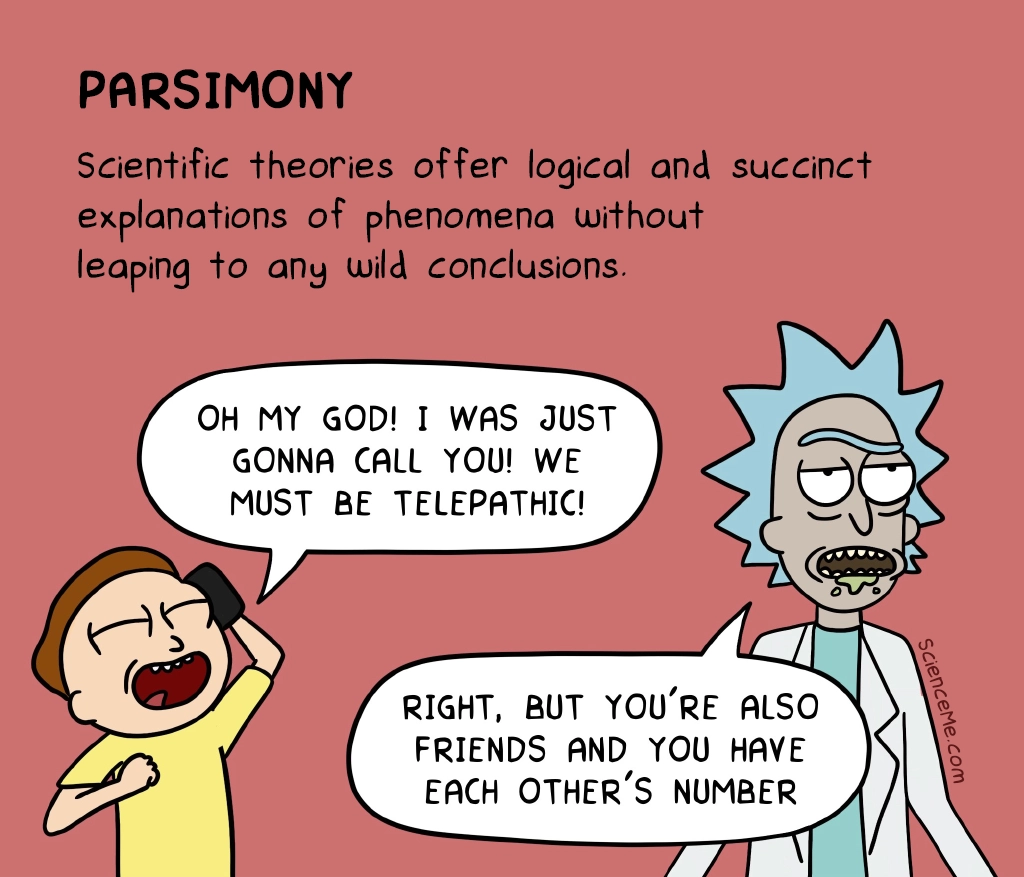

3. Parsimony

Humans are creative storytellers. We're capable of leaping from one fantasy to another to explain, entertain, and elucidate. But because our creative drive clashes with the need for parsimony in science, we must go out of our way to analyse and challenge our theoretical claims at every turn.

Parsimony, also known as Occam's Razor, states: "entities should not be multiplied beyond necessity". This means stripping any wild hypotheses out of the equation. It's not mythological demons making your wife speak in tongues; it's the clinically observed schizophrenia.

Conspiracy theories have a bad reputation because, by their very nature, they require extravagant assumptions. They conflict with the mainstream narrative and what we think we know to be true. History warns us conspiracies are plentiful, but sorting fact from fiction requires a testable hypothesis and empirical data before we can reach a parsimonious conclusion.

So what is parsimony? It's often misunderstood to mean simplicity. And yet Einstein's theory of general relativity isn't simple. Neither is Darwin's theory of evolution by natural selection. They are, however, the most parsimonious explanations of what we observe. They explain reality in the most succinct and reasonable way possible using only the evidence at hand.

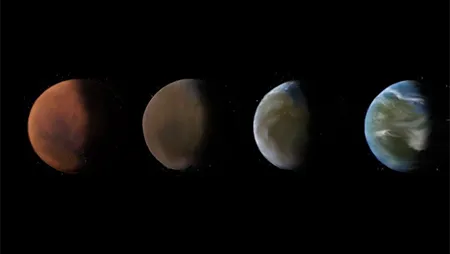

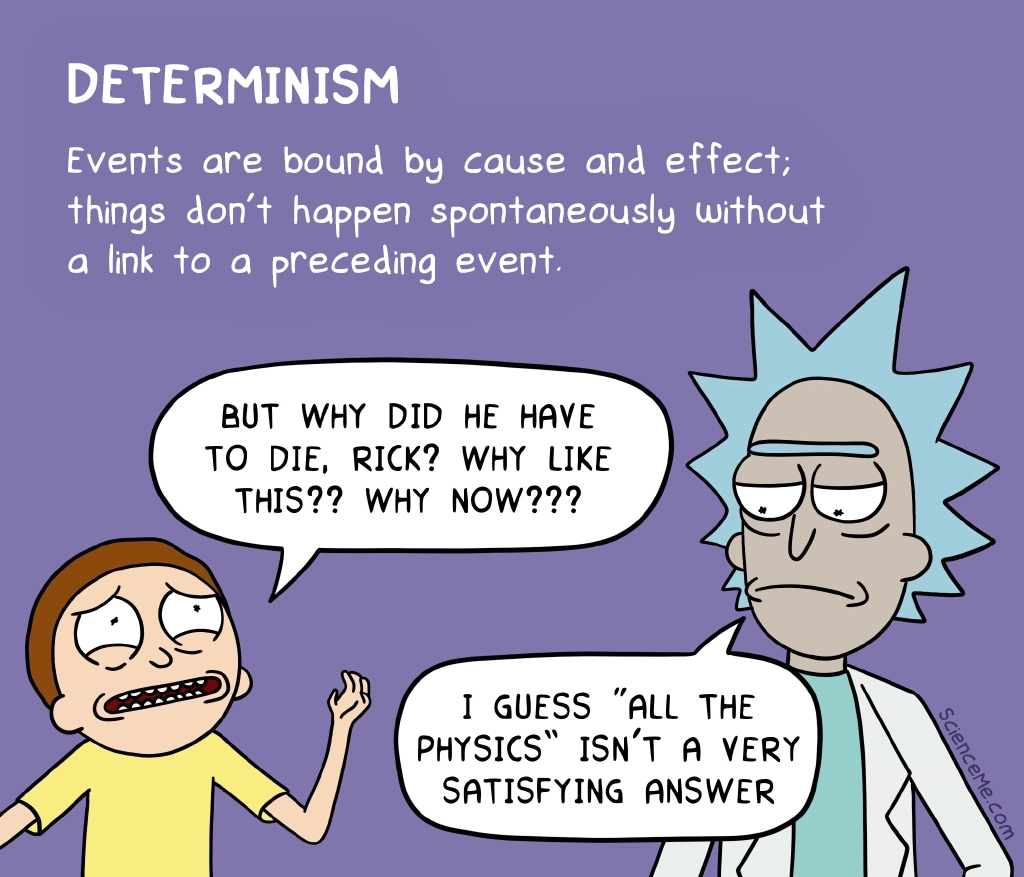

4. Determinism

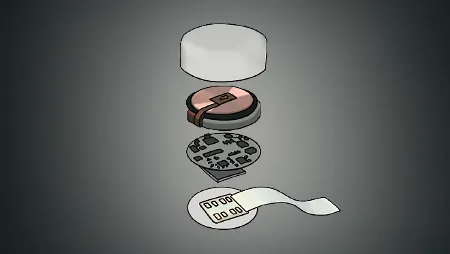

The natural world, as we observe it, is a complex web of cause and effect, from the gravitational forces that shape galaxies to the molecular interactions between neurotransmitters in your brain. Scientific reductionism strives to analyse phenomena in terms of deterministic cause and effect, a principle that renders fate, karma, and angelic intervention moot.

Quantum physicists are wrestling with a big problem: the subatomic world is not deterministic. It appears probabilistic and random. This observation poos right in front of classical physics and then rubs its face in it. How can the universe be both random and deterministic at the same time? This is the problem that haunted Einstein. Even today, we're missing a critical piece of the puzzle. Has anyone checked under the couch?

Fortunately, at the classical scale of light switches, bridges, and planets, determinism continues to drive our everyday world. Life goes on in a predictable fashion, despite the probabilistic quantum phenomena that underlie it. We can still accurately predict solar eclipses, sine waves, and even social dynamics.

The Never-Ending Mystery of Science

These fundamental principles drive our thinking at the foundation of science, on which many more principles are built. For instance, when a scientist proposes a new idea, her logic and experimental data are subjected to peer review. This adds another round of scrutiny, allowing fresh eyes to critique and evaluate the science with the relentless scepticism essential to the march of progress.

Peer review leverages collective intelligence. It's designed to protect against innocent errors in thinking as well as scientific fraud that can send research down the wrong path.

Revelatory research papers can drive funding into new scientific fields, spawning new predictions, new data, and new solutions. When ideas gather momentum, they allow science to home in on the truth with increasing specificity. Ultimately, historic theories may be updated or even overhauled in light of new evidence, making science a continual error-checking process.

Yet our scientific knowledge will never be complete. To understand life, the universe, and everything would leave no room for curiosity. This is what drives scientists: the never-ending mystery of the universe. We can delight in the incremental rewards—the glimpses of deeper truth—each discovery bringing new insights into the beautiful and astonishing system we call nature.

Written and illustrated by Becky Casale.